Machine Learning with Python

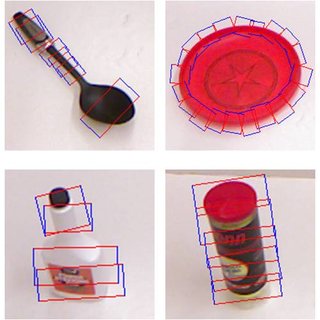

Supervised Classification

Machine learning (ML) has been described as the field that "gives computers the ability to learn without being explicitly programmed". ML is concerned with the study and construction of algorithms that can learn from data and make predictions on it. It is used in computing tasks where designing and programming explicit algorithms is infeasible. This part presents background on the supervised classification of data. This course was written with the help of Joris Guerin, Stéphane Thiery and Eric Nyiri.
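To make "learning from data and making predictions" concrete, here is a minimal sketch of a supervised classifier in plain Python. The nearest-centroid rule, the function names `fit` and `predict`, and the toy data are illustrative assumptions, not the method developed in this course. Training computes the mean feature vector of each class from labelled examples, and prediction assigns a new point to the class whose mean is closest.

```python
from math import dist  # Euclidean distance (Python 3.8+)

# Illustrative nearest-centroid classifier (hypothetical example, not the course's method).

def fit(X, y):
    """Learn one centroid (mean feature vector) per class label."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        if label not in sums:
            sums[label] = [0.0] * len(x)
            counts[label] = 0
        # Accumulate feature values for this class
        sums[label] = [s + v for s, v in zip(sums[label], x)]
        counts[label] += 1
    # Divide each sum by the number of examples in its class
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], x))


# Toy labelled training data, made up for illustration
X_train = [[1.0, 1.0], [1.5, 2.0], [5.0, 5.0], [6.0, 5.5]]
y_train = ["A", "A", "B", "B"]

model = fit(X_train, y_train)
print(predict(model, [1.2, 1.4]))  # → A
print(predict(model, [5.5, 5.0]))  # → B
```

Separating `fit` (learn from labelled examples) from `predict` (label new data) mirrors the interface used by libraries such as scikit-learn, which offers a comparable estimator, `sklearn.neighbors.NearestCentroid`.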